Alata is a multimedia performance piece that plays with ideas of empathy, acceptance, and the abject through the gradual establishment of rapport between its two performers.

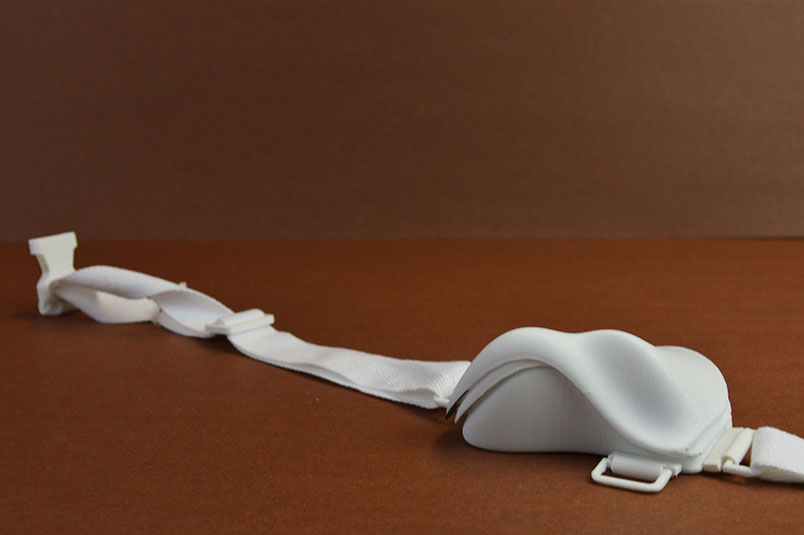

The piece is driven by the eponymous instruments, which are worn on the performers' backs and modeled after bone deformities.

Because each instrument sits on the wearer's back, a performer cannot play their own instrument and must rely on the other person to interact with it.

The instruments were built from 3D-printed materials, force-sensitive resistors, and an Arduino Fio, with an XBee radio providing wireless transmission to a laptop.

The instruments send their sensor data to Max/MSP, which maps it to sound parameters using machine learning provided by Wekinator, giving a more organic representation of the performers' gestures.
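As a rough illustration of the sensor-to-Wekinator step, here is a minimal Python sketch. It is not the Max/MSP patch used in the piece. It assumes raw 10-bit force-sensor readings (0 to 1023) and Wekinator's default OSC input settings (address `/wek/inputs`, UDP port 6448). The function names `normalize`, `wekinator_message`, and `send` are hypothetical.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def normalize(raw, adc_max=1023):
    """Clamp raw readings to [0, adc_max] and scale to 0.0-1.0 (assumes a 10-bit ADC)."""
    return [min(max(r, 0), adc_max) / adc_max for r in raw]


def wekinator_message(values, address="/wek/inputs"):
    """Encode a list of floats as a single OSC message of float32 arguments."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send(values, host="127.0.0.1", port=6448):
    """Send one frame of inputs over UDP (6448 is Wekinator's default input port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(wekinator_message(values), (host, port))
```

Calling `send(normalize([512, 80, 1023]))` would deliver one frame of three inputs to a Wekinator project configured for three inputs. Wekinator then emits its trained outputs as OSC, which a patch can map to synthesis parameters.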

I was inspired to create the piece by my frustration with electronic instruments being presented as solo instruments rather than ones that lend themselves to ensemble playing, which I see as integral to musical performance and to the artistic viability of an instrument.

I wanted to force an interaction in which the performers are inclined to communicate with each other through gesture, quite literally so, considering that touch is the most intimate action one can perform with another body. There is a kind of identity transfer between the wearable instrument and its wearer, which dramatically changes the type and quality of interaction between performer and instrument. Each performer interacts with the other's instrument as they would interact with the body of the other.

By highlighting the importance of gesture, I aimed for the performers to create an intersubjective space among themselves as well as between themselves and the audience. Through the perception of action, both visual and auditory, the audience relives the experience of the performers through their own bodies.

The piece was made possible with the assistance of the UC Berkeley Invention Lab, with special thanks to Mitchell Karchemsky, Chris Myers, and Kuan-Ju Wu.